A project required me to produce several minutes of talking animations for a virtual spokesperson on a regular basis.

Manually animating this was out of the question, as was routine performance capture, so I decided to hack together my own setup that would, at best, dynamically generate finished animation or, at worst, cut down production time.

How does one build a Talking Head System?

The system was designed around the idea of speech frequency: depending on the rate at which the person was talking, it would dynamically blend between different animations, pausing and speeding up as the speaker did when they recorded their audio.

If done well, this could generate talking animations for a character from any audio clip in real time.

Naturally, this was easier said than done.

The first step was to gather a lot of motion capture. Using a Rokoko Smartsuit, I recorded somewhere around 15 minutes of motion capture, sitting in a chair and talking. This was the fun part.

Afterwards, I isolated parts of the data into loopable animation clips, cleaning up the data where needed. Outside of a couple of distinct hand gestures, there wasn't actually much noticeable variety to the movements (which is very good for what I was trying to do).

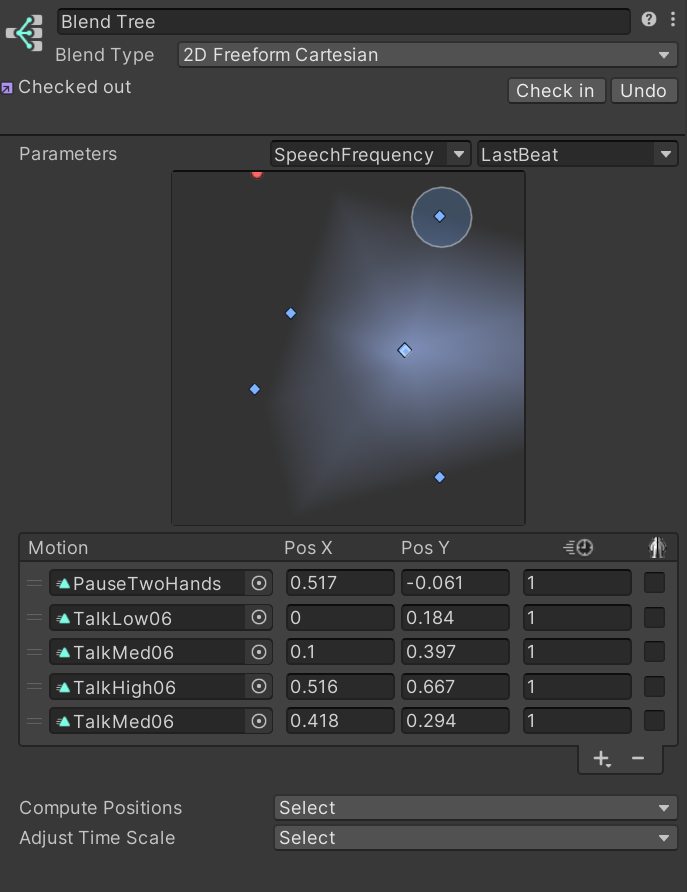

Each clip was categorized as Low Frequency, Medium Frequency, High Frequency or Pausing depending on how fast the movements were.

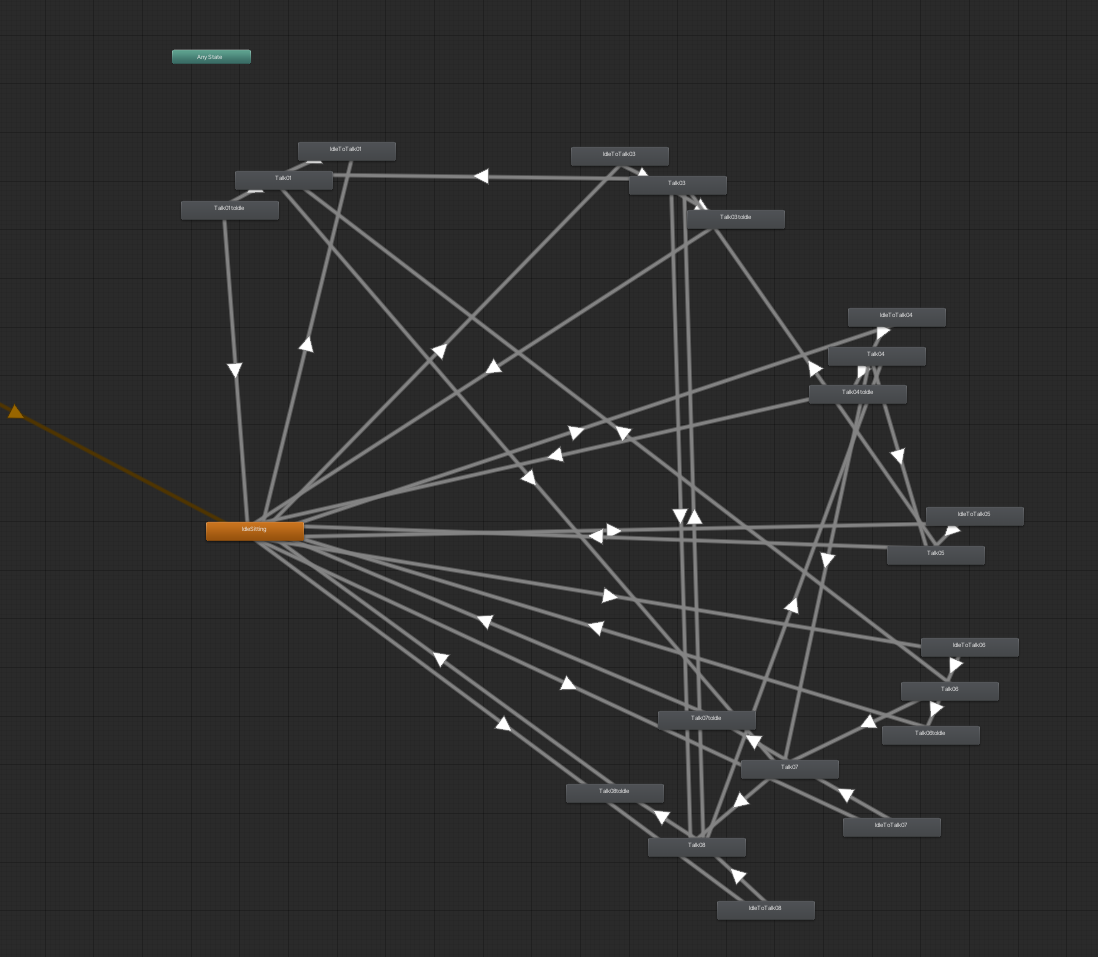

From here, I created an animation controller and assembled blend trees containing the aforementioned clips. To drive the animations, I needed something that could interpret the audio. Following a tutorial by Renaissance Coders, I was able to extract data from the audio clips.
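As a rough illustration of what "interpreting the audio" can mean here (this is not the tutorial's code, and it is written in Python rather than Unity C#), the sketch below measures loudness in short windows and counts how often the signal rises from quiet to loud. The onsets-per-second figure is one plausible stand-in for Speech Frequency. The function names, window size and threshold are all assumptions made for the example.

```python
import math


def rms_envelope(samples, window_size):
    """Return one RMS loudness value per non-overlapping window of samples."""
    envelope = []
    for start in range(0, len(samples) - window_size + 1, window_size):
        window = samples[start:start + window_size]
        envelope.append(math.sqrt(sum(s * s for s in window) / window_size))
    return envelope


def count_onsets(envelope, threshold):
    """Count windows where loudness rises from below the threshold to at or above it."""
    onsets = 0
    was_loud = False
    for level in envelope:
        is_loud = level >= threshold
        if is_loud and not was_loud:
            onsets += 1
        was_loud = is_loud
    return onsets


def speech_frequency(samples, sample_rate, window_size=512, threshold=0.1):
    """Onsets per second: a rough proxy for how fast someone is talking."""
    duration = len(samples) / sample_rate
    if duration == 0:
        return 0.0
    envelope = rms_envelope(samples, window_size)
    return count_onsets(envelope, threshold) / duration
```

In a real-time setting the same counting would run over a sliding window of recent audio rather than a whole clip, so the value can rise and fall as the speaker speeds up or pauses.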

All the pieces were in place.

Speech is a spectrum

I initially thought that simply mapping the Speech Frequency values to the blend tree would be sufficient. IT WAS NOT. The character was either talking or they weren't, with no room for in-between. Their hands would drop mid-sentence, only to be picked back up as soon as the speech resumed. It felt robotic.

Speech is a very organic thing. Humans are all over the place with our rate of speech: we get excited, we pause, we lose our train of thought only to catch it again... None of that can be captured linearly. So I shifted my approach.

I introduced another parameter to the Blend Tree: TimetoBeat, which, in terms of the system, keeps track of how long it's been since speech was detected. I mapped this along with Speech Frequency in a Freeform Cartesian Blend Tree. Its flexibility allowed me to map the different clips to the values non-linearly, creating the illusion of thought and trepidation when the character spoke.

The work wasn't over, though. I still had to manually author blend trees for each group of clips, as well as create new animations to transition between them, but it was certainly a start.
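The two-parameter idea can be sketched outside Unity as follows. This is a simplified illustration, not the project's code: the tracker class, the clip positions and the inverse-distance weighting are assumptions for the example, and Unity's Freeform Cartesian blend uses its own interpolation scheme rather than this one.

```python
import math


class TimeToBeatTracker:
    """Tracks how long it has been since speech was last detected."""

    def __init__(self, threshold=0.1):
        self.threshold = threshold  # hypothetical loudness cutoff for "speech"
        self.time_to_beat = 0.0

    def update(self, loudness, delta_time):
        if loudness >= self.threshold:
            self.time_to_beat = 0.0  # speech detected: reset the timer
        else:
            self.time_to_beat += delta_time  # silence: keep counting
        return self.time_to_beat


def blend_weights(clips, speech_frequency, time_to_beat):
    """Normalized inverse-square-distance weights for clips placed in 2D.

    A stand-in for a 2D blend tree, NOT Unity's actual algorithm.
    """
    weights = {}
    for name, (freq, ttb) in clips.items():
        distance = math.hypot(speech_frequency - freq, time_to_beat - ttb)
        if distance == 0.0:
            # Exactly on a clip's position: play that clip alone.
            return {n: (1.0 if n == name else 0.0) for n in clips}
        weights[name] = 1.0 / (distance * distance)
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


# Hypothetical placement as (speech frequency, seconds since speech).
# Pausing sits far along the time axis, so the hands do not drop
# the instant the speaker goes quiet.
CLIPS = {
    "Pausing": (0.0, 2.0),
    "LowFrequency": (1.0, 0.0),
    "MediumFrequency": (3.0, 0.0),
    "HighFrequency": (5.0, 0.0),
}
```

With this placement, half a second of silence after slow speech still favors the Low Frequency clip, and the Pausing clip only takes over as the silence stretches on.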

The icing on the cake

Without some form of speech recognition, the animations will always feel somewhat disjointed. I needed a way to tie the audio more closely to the movements, and so I added "emotes" to the system.

I isolated specific gestures from the mocap data and added them to the controller. Timeline Signals mark the points in the audio where a corresponding phrase or word is spoken and trigger the matching gesture, so the emotes organically weave in and out of the character's speech.
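The cue mechanism itself is simple enough to sketch. The class below is a hypothetical Python stand-in for that kind of marker track, not Unity's Timeline API: each cue pairs a timestamp in the audio with an emote name, and cues fire once as playback passes them. The emote names are made up for the example.

```python
class EmoteCueTrack:
    """Fires named emotes once playback reaches their timestamps."""

    def __init__(self, cues):
        # cues: iterable of (time_in_seconds, emote_name) pairs
        self.cues = sorted(cues)
        self.next_index = 0

    def advance(self, playback_time):
        """Return the emotes whose cue time has been reached since the last call."""
        fired = []
        while (self.next_index < len(self.cues)
               and self.cues[self.next_index][0] <= playback_time):
            fired.append(self.cues[self.next_index][1])
            self.next_index += 1
        return fired


# Hypothetical cues, placed by hand where a matching word is spoken.
track = EmoteCueTrack([(2.5, "Shrug"), (0.8, "PointForward")])
```

Calling `track.advance(current_audio_time)` every frame returns an empty list most of the time and the emote name on the frame its cue is crossed, which is the moment to trigger the gesture on the controller.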

This lent the system a bit more believability... but I wanted MORE.

I created Blendshapes for the character's eyes, keeping in line with Apple's ARKit setup, and mapped them to Unity's Face Capture plugin. This tied it all together and allowed the system to take a secondary place, augmenting the performance instead of being the center of it. After all, no amount of automation can beat a good take.

Conclusion

This system was a huge undertaking on my part. I spent just as much time programming as I did authoring keyframes, but I'm happy with the results. It's not without its flaws (it needs a lot of animation data to be convincing, among other things), but it's already saved me weeks of work.

There's plenty of room for improvement. I'm eager to integrate Unity's procedural Animation Rigging package into this system and see how far I can push it.